I've been trying to think of ways to use ChatGPT to make my life easier as an Oracle DBA. So far I haven't really found anything game-changing but have managed to find a few things that have made certain things easier.

Coding / Scripting / SQL

The most obvious thing is getting ChatGPT to write SQL and code. It is really good, but doesn't always get things 100% correct. One piece of code told me to use a DBMS package that doesn't exist. Another did a select on a table column that wasn't there.

But it is generally very good. In the past if I wanted a script or SQL I'd Google, find something that was nearly correct, hack it about and would need to go back and forth between Google, changing the script, testing it, going back to Google etc and it would take quite a while.

With ChatGPT you can do it all in the same place and prompt it for enhancements.

There's a Python script I got it to write: We have monitoring set up at our client sites, both shell scripts, SQL and OEM. These send us emails every time they run. A long time ago we settled on a standard subject line: CLIENT:HOSTNAME:DBNAME:METRIC:RESULT.

So something like WIDGETCO:PROD1:PRODDB:RMAN BACKUP:SUCCESS

Someone manually goes through these emails every day to look for WARNING or CRITICAL errors and checks them off on a spreadsheet to make sure we get the emails we're expecting.

I asked ChatGPT if there was a way to read my mail and look for the subjects that contain the words "SUCCESS","WARNING" and "CRITICAL" for the last 24 hours. It gave me a python script which I ran. I then asked it to make it 72 hours (to cater for weekends), which it did. I then told it to remove duplicates. Then to colour code them. Then to add the time the email was received. And so on. Eventually it gave me exactly what I wanted. I just run the script every day in a command prompt window and it gives me this

I won't post the script here, it might not serve your purpose, but all you need to do is go to ChatGPT and ask it to create what you want. "Can you write a script that connects to my email and lists all subject lines over the last 72 hours that contains the words "SUCCESS","SUCCEEDED","FAILED","WARNING","CRITICAL" and colour code them..."" etc.

You can run it and ask ChatGPT to enhance it as you go.

Obviously you can also ask it to write SQL for you instead of searching Google. I often just ask it stuff like "write an Oracle SQL script to...." and it does it.

It can also fix SQL. I was given some scripts by a client and one of them failed with ORA-00920. If I used Google it would tell me what the issue was, and I'd have to figure out where in the SQL it was breaking. Instead, I just pasted it into ChatGPT and told it what was wrong "this fails with ora-00920, fix it" and it gave me the correct code.

9 times out of 10 if you ask ChatGPT for a script it will give you something that works. If it isn't exactly how you want it, just tell it and it will re-write if for you. Saves so much time and messing around.

It can also help with stuff that might take ages to Google and get something that works. Here are some examples I've used.

A client wanted yesterday’s alert log data. The alert log

goes back to May 2020. I edited the log, searched for the current date and it took

me to the first entry for that day. So I want to just extract from this line to the end.

How do I extract a section of

a unix file from line 7717529 to the end?

It gave me 3 examples, I used this

tail -n +7717529

filename > alert_log_extract.txt

Another example:

how to estimate the rman

backup size of an oracle container database?

It gave me some SQL, but I wasn’t sure if that included

the pluggables, so asked

does this take into account

multiple pluggable databases?

And it gave me better SQL.

Formatting output

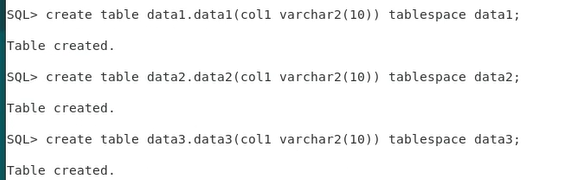

We're currently doing some migrations using XTTS, and there is a config file that needs the tablespace names on one line separated by commas, and there was about 130 tablespace names. I ran 'select tablespace_name from dba_tablespaces order by 1;' and it created the list as a column, as you'd expect:

TABLESPACE_NAME

TBS1

TBS2

TBS3

TBS4

etc.

I went to ChatGPT and said "make these comma-separated all on one line" and pasted in the output, and it did it. But it left a space after each comma so I said "remove the space after the comma" and it did. So I just copied and pasted the output into the config file. Again, so much time saved.

Another neat trick I found - a client sent a screen shot of a table. I wanted it in CSV format so I could paste it into an Excel spreadsheet - of course, ChatGPT could do it. Something like this

So I pasted it into ChatGPT and asked for it in csv format and it did, so I could copy and paste it into Excel.

Just ask it stuff

Some more examples of what I've asked it

what are the deprecated features between oracle database v11 and v19c

Which PL/SQL packages are deprecated in 23C

What are the hard limits for Oracle Table size, index size, number of extents, maximum number of tables, maximum number of indexes, sequence hard limits or any other hard limits that we need to be aware of.

What licence options are required for Oracle AWR

how to check the oracle timezone file in use

how do i change this

can I get a time from an oracle scn

show oracle disabled or invalid constraints

Write a script to find all SQL taking more that 10 seconds for Oracle 19C using v$ tables including the SQL statement, number of executions and runtime

Basically ChatGPT has made a lot of things easier for me, especially getting it to write a command or script to do something that I only need to do once. Before, I'd be going back and forth with Google; ChatGPT usually gives me something I can use immediately, and if it isn't quite what I want it can give me something else in the same session.