After creating an Oracle database on AWS, I found it less flexible than having a Linux host: no SQL*Plus, I couldn't get APEX ORDS to work, no cron, and so on.

So I thought I'd create a Linux instance and do it that way. One of our clients is doing this, and when we connect it's just a Putty connection to a Linux host; you wouldn't know it was in AWS.

Before you go too far, download 'puttygen.exe'; you'll need it later.

So, log into the AWS console.

Select 'EC2' at the top of the page.

At the next page, click on 'Launch Instance'

Click on 'Select' against the Red Hat Instance

Make sure the 'General Purpose' one is checked; it's the free one.

Click on 'Review and Launch'. If you select 'Next: Configure Instance Details' instead, it goes through more options, all of which will cost money, so you probably don't want to.

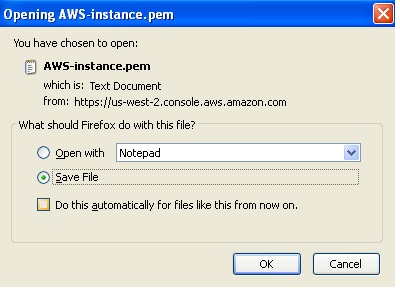

Give the key pair a name, then click on 'Download Key Pair'.

Click on 'Launch Instances'

A page will show the instance being created, then you will see this:

You will see a 'Public DNS'; this is the address you use with Putty. However, the PEM file generated by 'Create a new key pair' doesn't work with Putty, which is why you need Puttygen.

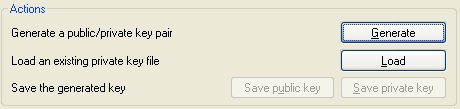

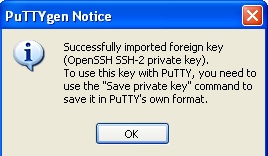

Start puttygen.exe

Make sure 'SSH-2 RSA' is selected at the bottom.

Click on 'Load'

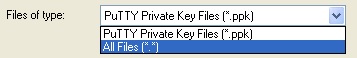

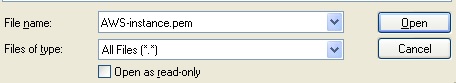

Use the drop-down to select 'All Files'

Locate the PEM file you generated and click 'Open'.

A couple of windows will open; click 'OK' at this one:

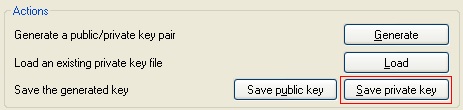

Then click on 'Save Private Key'.

Click 'Yes' here:

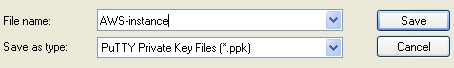

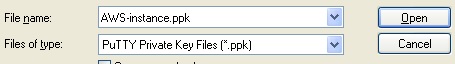

Save the file as a 'ppk', give it the same name as the PEM file, and click 'Save'.
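If you ever want to script the naming rule above (same name as the PEM file, but with a 'ppk' extension), here is a minimal Python sketch. The function name `ppk_path_for` and the example file name are made up for illustration; it only works out the file name and does not convert the key, which is still Puttygen's job.

```python
from pathlib import Path


def ppk_path_for(pem_path: str) -> Path:
    """Return the ppk path: same folder and base name as the PEM file."""
    # with_suffix swaps the final extension (.pem) for .ppk
    return Path(pem_path).with_suffix(".ppk")


# Hypothetical key file name, purely for illustration.
print(ppk_path_for("my-aws-key.pem"))
```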

You can now close the puttygen window.

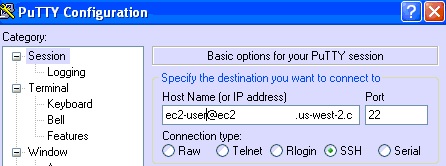

Open Putty, and create a new session.

In the host name field, enter ec2-user@xxxxxx.xxxxx.xxxxx.xxxx where the xxxx's are the Public DNS from the AWS console.
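The host name value is just the login user and the Public DNS joined with an '@'. As a rough sketch in Python (the helper name `putty_host` is my own invention, and the DNS value is the same placeholder as above, not a real address):

```python
def putty_host(public_dns: str, user: str = "ec2-user") -> str:
    """Build the 'user@host' value for Putty's host name field."""
    host = public_dns.strip()  # drop stray spaces picked up when copying
    if not host:
        raise ValueError("Public DNS is empty; copy it from the AWS console")
    return f"{user}@{host}"


# Placeholder only; use the Public DNS shown for your own instance.
print(putty_host("xxxxxx.xxxxx.xxxxx.xxxx"))
```

Putting the user in the host name saves you typing 'ec2-user' at a login prompt each time you connect.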

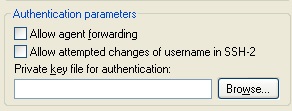

Expand 'SSH' in the left-hand window and expand 'Auth'.

Browse to the ppk file you generated with Puttygen and click 'Open'.

Click on 'Session', give the session a name, and then click on 'Save'.

Then click on 'Open'.

Click 'Yes' at the security alert screen

You should now be logged in; it won't prompt for a password.

Note that to do anything as root you need to use 'sudo'.

So that’s it, you now have a Red Hat Linux host. I was going to do another document on how to install Oracle, but it’s the same as installing Oracle on any Linux host, so just scp the installation files to the host and follow the Oracle documentation.
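For the scp step, here is a small Python sketch that only builds and prints the command rather than running it. It assumes an OpenSSH-style `scp` client, which takes the original PEM file with `-i` (the ppk is only for Putty). The function name `scp_command`, the key and installer file names, and the `/tmp` target directory are all assumptions for illustration.

```python
import shlex


def scp_command(key_file, files, public_dns, user="ec2-user", remote_dir="/tmp"):
    """Return the scp argument list for copying local files to the host."""
    if not files:
        raise ValueError("no files to copy")
    # scp target has the form user@host:directory
    target = f"{user}@{public_dns}:{remote_dir}"
    return ["scp", "-i", key_file, *files, target]


cmd = scp_command(
    "my-aws-key.pem",           # hypothetical PEM key file name
    ["oracle-install.zip"],     # hypothetical installer file name
    "xxxxxx.xxxxx.xxxxx.xxxx",  # placeholder Public DNS
)
print(shlex.join(cmd))  # shows the command so you can check it before running
```

Once the printed command looks right, you could pass `cmd` to `subprocess.run`, or simply paste it into a terminal.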