So, I've done a couple of these now, and basically it's export / import with a few other things to be aware of.

You need the requestor to provide:

·

Source host

·

Source database name (SID)

·

Source Schemas to be exported

·

Source dba username / password if we don’t have

a record

·

Target host (AWS RDS endpoint)

·

Target SID

·

Target dba username / password if we don’t have

a record

If Going From Enterprise Edition to Standard Edition

The on prem database might be EE, but the target RDS is SE.

Make sure you check, and export with no EE parameters (compression for

example), and check for partitions. If the source database has partitioned

tables you will need to use the PARTITION_OPTIONS=DEPARTITION parameter on

import. This will import the partitions into multiple tables which you will

then need to merge into one or create a view on (check with the requestor to

see what they prefer).

To merge into one

create table

owner.table_name as (select * from

table_name_p1)

union (select *

from table_name_p2)

union (select *

from table_name_p3);

etc

Where p1, p2 and p3 are the names created by the import.

You’ll also need to create the grants and indexes.

If you have to turn compression off, the export file will be 10x bigger and take a long time to transfer, but there’s no way around it.

Connect to the on prem host and run the export

RDP or ssh to the on prem host, depending on whether it's Windows or Unix.

This example is Windows.

open up a command prompt

set

ORACLE_SID=SOURCE_SID

sqlplus

dbauser/password <<

you will need a DBA account

select * from

dba_directories;

There may be an obvious migration data pump directory, use

that. If not, check the space in the data_pump_dir first.

Exit SQLPlus and run the export, check the parfile and

change as required, change the name and date on the files

DUMPFILE=SOURCE_SID_FULL_%u_11JULY.DMP

LOGFILE=SOURCE_SID_FULL_11JULY.LOG

SCHEMAS=SCHEMA_1,SCHEMA_2

DIRECTORY=DATA_PUMP

PARALLEL=16

COMPRESSION=ALL << If the destination is

Standard edition, don’t use this

FILESIZE=5G

expdp

dbauser/password parfile=exp_on_prem.pf

In Windows explorer:

Navigate to the directory you

used to dump the file and check the log.

So far, so usual. Now comes the messy bits.

Transfer the files to the AWS data pump dir

The process is to create a database link in the source database to the target, then use dbms_file_transfer to send the files to the AWS RDS instance.

You need to login to the source database and find out the

database link name, or create it if it isn’t there

create database link

TO_AWS_RDS_SID connect to masteruser identified by “xxxxx” using '(DESCRIPTION=(ADDRESS=(PROTOCOL=TCP)(HOST=)(PORT=1521))(CONNECT_DATA=(SID=TARGET_SID)))'

Test it, and verify the info with the requestor to make sure

it is the right one. You don't want to overwrite a database that's in use because the import is going to use 'table_exists_action=replace'.

If the link doesn't work, you need to contact the people looking after AWS Security groups and get them to allow the connection - it may be the port, it may be the source host isn't in the whitelist or IP range.

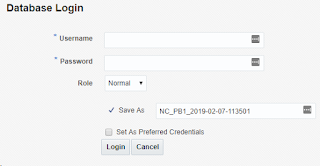

Check the files in the data pump directory on the RDS

instance – ask if you can clear out any old ones – log in with SQLDeveloper and

run this to list the files in the data_pump_dir on the AWS instance.

select * from

table(RDSADMIN.RDS_FILE_UTIL.LISTDIR('DATA_PUMP_DIR')) order by mtime desc;

Delete any old dump files if OK.

exec

UTL_FILE.FREMOVE (location => 'DATA_PUMP_DIR',filename => 'XXXX.DMP');

On the Windows host in a Command prompt, or from a unix terminal, connect to sqlplus.

sqlplus dbauser/password

Depending on the number of files, edit the PL/SQL blocks below - change the numbers - several

in one loop, and open multiple command prompts or terminal sessions and run several, covering all

files – change the file date/names accordingly, and run it in SQLPlus:

This does files 01 to 09

begin

for i in 1..9

loop

DBMS_FILE_TRANSFER.PUT_FILE(

source_directory_object => 'DATA_PUMP',

source_file_name =>

'SOURCE_SID_FULL_0'||i||'_11JULY.DMP',

destination_directory_object => 'DATA_PUMP_DIR',

destination_file_name =>

'SOURCE_SID_FULL_0'||i||'_11JULY.DMP',

destination_database => 'AWS_RDS_SID'

);

end loop;

end;

/

This does files 10 to 15

begin

DBMS_FILE_TRANSFER.PUT_FILE(

source_directory_object => 'DATA_PUMP',

source_file_name => 'SOURCE_SID_FULL_1'||i||'_11JULY.DMP',

destination_directory_object => 'DATA_PUMP_DIR',

destination_file_name => 'SOURCE_SID_FULL_1'||i||'_11JULY.DMP',

destination_database => 'AWS_RDS_SID'

);

end loop;

end;

/

etc

If it's just a single file

exec

DBMS_FILE_TRANSFER.PUT_FILE(source_directory_object => 'DATA_PUMP',source_file_name

=> 'SOURCE_SID_FULL_01_11JULY.DMP',destination_directory_object =>

'DATA_PUMP_DIR',destination_file_name =>

'SOURCE_SID_FULL_01_11JULY..DMP',destination_database => 'AWS_RDS_SID');

In SQLDeveloper, run the command to see the files as they

are uploaded:

select * from

table(RDSADMIN.RDS_FILE_UTIL.LISTDIR('DATA_PUMP_DIR')) order by mtime desc;

The time it will take to upload depends on the number and size of the files and the link between on prem and AWS.

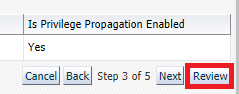

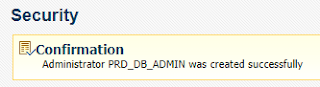

Pre-Create the objects

If this is a new RDS instance, you will need to pre-create

the tablespaces, roles and possibly any directories – check with the user.

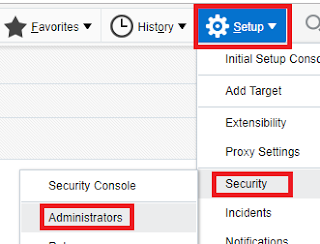

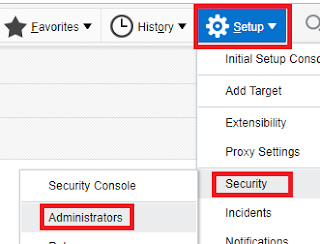

To pre-create the roles and users, use SQLDeveloper – create a connection

to the on-prem in the ‘DBA’ window, select the roles or users from the 'Security' menu item and click on 'SQL' to generate the DDL.

Copy and paste it and run it in the

new environment.

Import the data

There are 2 methods

From SQLDeveloper (this example is from a different

database):

DECLARE

hdnl NUMBER;

BEGIN

hdnl :=

DBMS_DATAPUMP.OPEN( operation => 'IMPORT', job_mode => 'SCHEMA',

job_name=>'IMP_FROM_ON_PREM');

DBMS_DATAPUMP.ADD_FILE(handle

=> hdnl, filename => 'SOURCE_SID_FULL_%u_11JULY.DMP', directory =>

'DATA_PUMP_DIR', filetype => dbms_datapump.ku$_file_type_dump_file);

DBMS_DATAPUMP.ADD_FILE(handle

=> hdnl, filename => 'SOURCE_SID_FULL_11JULY.LOG', directory =>

'DATA_PUMP_DIR', filetype => dbms_datapump.ku$_file_type_log_file);

DBMS_DATAPUMP.SET_PARAMETER(handle

=> hdnl, name => 'METRICS', value

=> 1);

DBMS_DATAPUMP.SET_PARAMETER(handle

=> hdnl, name =>

'TABLE_EXISTS_ACTION', value => 'REPLACE');

DBMS_DATAPUMP.START_JOB(hdnl);

END;

/

Edit this accordingly - you may want to take out the line in red.

The second method is from a bridge or bastion host. Some places will use these as a gateway between on prem and RDS. This also assumes that the bastion host has at least the Oracle client installed.

You need to check the tnsnames.ora on the bridge host and either identify the correct destination or create a new entry, and update the script below as required.

Run this to perform the import, confirm with the requestor

that the tables will be over written:

sudo su - oracle

nohup impdp

masteruser/"password"@AWS_RDS_SID DUMPFILE=SOURCE_SID_FULL_%u_11JULY.DMP logfile=SOURCE_SID_FULL_11JULY.log parallel=16 full=y directory=DATA_PUMP_DIR PARTITION_OPTIONS=DEPARTITION << use this if the target is SE

and source has partitions

table_exists_action=replace &

Tail the nohup.out, or run this in SQLDeveloper if using that:

SELECT text FROM

table(rdsadmin.rds_file_util.read_text_file('DATA_PUMP_DIR','SOURCE_SID_FULL_11JULY.log'));

You can also check if it is still running with

select * from

SYS.dba_resumable;

Once complete, check the log to make sure it worked OK and

advise the requestor.

That’s it.